Buyers are already asking AI search engines for recommendations during their discovery and evaluation processes.

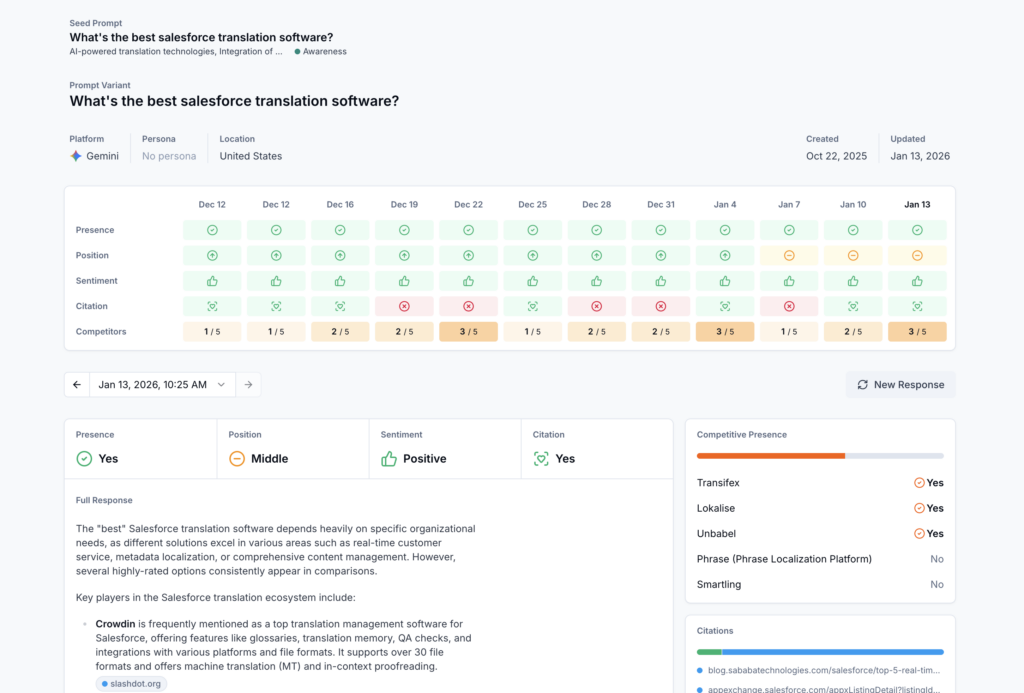

When someone searches “best [category] software for [use case]” in ChatGPT, does your brand appear?

When a prospect evaluates your product against competitors after a sales call, is Gemini using your competitive narrative or your competitors’ content to structure its response?

Put bluntly: if your brand is not being served in these results, you’re invisible to a rapidly growing segment of the new buyer journey.

Here’s what most companies don’t realize: AI visibility compounds. The brands building presence now are training these models to recognize them as category leaders. Once a competitor dominates mention rate for your target prompts, displacing them gets exponentially harder. You’re either building the moat or getting locked out.

We’ve spent the past 12 months working with 50+ B2B SaaS companies on AI search visibility. We know exactly what’s driving demos, trials, and revenue from AI search.

This guide is that playbook: the factors that matter, the execution sequence, and the 90-day plan we follow with every client.

Google still dominates. According to Ahrefs’ analysis of 75,969 websites, Google accounts for approximately 40% of all referral traffic. AI search platforms collectively represent just 0.24%.

That gap is massive. So why does AI search matter?

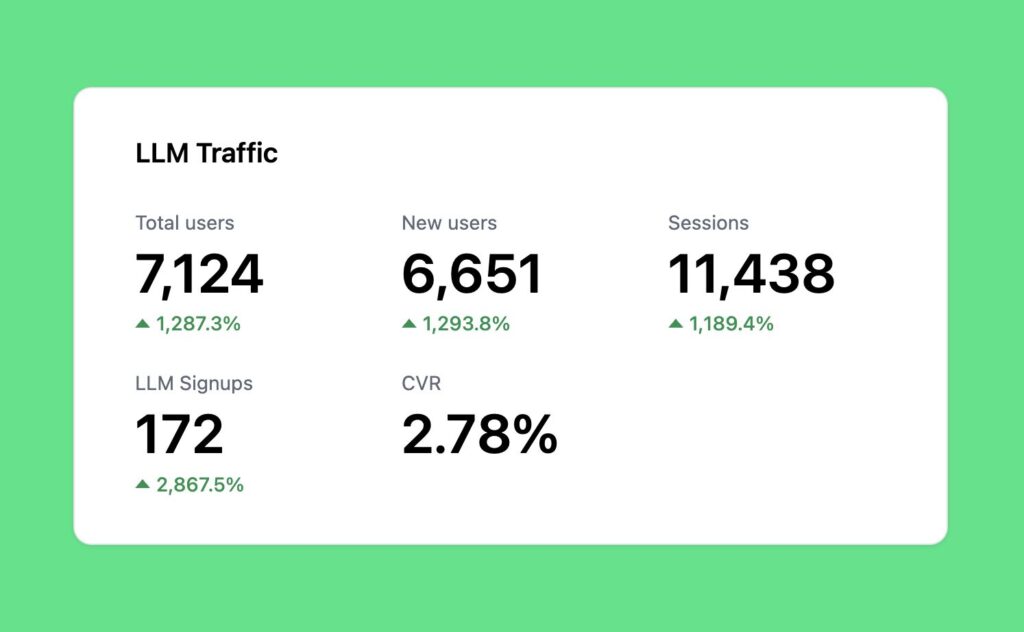

We’re seeing 3-4x higher conversion rates on ChatGPT traffic versus traditional Google traffic for solution-aware pages.

Don’t just take our word for it: Ahrefs recently reported that AI search visitors convert at a rate 23x higher than traditional organic visitors.

The reason is intent, and it comes down to how buyers actually use AI search versus Google.

Traditional Google queries are short and broad: “invoicing software” or “best CRM.” The user clicks through multiple results, reads, compares, and pieces together their own answer.

AI search queries are different. Buyers ask sophisticated, specific questions upfront: “What’s the best invoicing software for agencies with recurring billing for a 20-person team?”

Then they drill deeper with follow-up prompts before they ever click anything.

They’re building a shortlist inside the AI conversation.

By the time they click through to your site, they’ve already done their initial research. They’ve already received a recommendation. They’re not browsing. They’re validating a decision.

And once they have a shortlist, many come back to AI search to run comparisons: “Compare [Your Product] vs [Competitor] for [use case].”

If you’re not showing up in that conversation either, you’ve lost the deal before you knew you were in it.

Small traffic. High intent.

After 12 months of running AI search campaigns for B2B SaaS companies, we’ve developed a simple framework for what actually drives visibility that converts.

We call it the GEO Stack.

This starts with a fundamental shift: BOFU first.

Most companies default to top-of-funnel content: “What is customer success software?” or “The complete guide to project management.”

This content can rank in Google. It can drive traffic. But in AI search, it gets you zero visibility.

If you’re still doing this, you’re on the wrong path. Across the client content we monitor, TOFU content typically converts at under 0.5%, which makes it a waste of time and effort.

Here’s why: when someone asks ChatGPT “what is customer success software?”, the AI answers the question completely. The user gets what they need without ever seeing your brand or clicking through to your site. They’re satisfied. They never needed you.

Bottom-of-funnel works differently.

When someone asks “best customer success software for SaaS companies with under 50 customers,” AI recommends specific products. If your brand is in that recommendation, you’ve just been introduced to a solution-aware buyer who’s ready to evaluate.

Run these 10 prompts in an AI search tracker such as Scrunch or Profound to start.

Track whether your brand appears:

Score: How many times did your brand appear out of 10?
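If you export the raw response text for each prompt from your tracker, the score can be tallied with a few lines of Python. This is a minimal sketch, not a feature of any particular tool: the brand names (`AcmeBill`, `InvoiceCo`, `PayFlow`), the prompts, and the responses are hypothetical placeholders, and whole-word matching is an assumed way of deciding what counts as a mention.

```python
from __future__ import annotations

import re


def brand_mentioned(response_text: str, brand: str) -> bool:
    """Return True if the brand appears as a whole word, ignoring case."""
    pattern = r"\b" + re.escape(brand) + r"\b"
    return re.search(pattern, response_text, flags=re.IGNORECASE) is not None


def presence_score(responses: dict[str, str], brand: str) -> tuple[int, int]:
    """Count prompts whose response mentions the brand; return (hits, total)."""
    hits = sum(brand_mentioned(text, brand) for text in responses.values())
    return hits, len(responses)


# Hypothetical prompt -> response pairs (assumed data, for illustration only).
responses = {
    "best invoicing software for agencies": "Top picks: AcmeBill, InvoiceCo and PayFlow.",
    "invoicing tools with recurring billing": "Consider InvoiceCo or PayFlow.",
    "AcmeBill alternatives": "Alternatives to acmebill include InvoiceCo.",
}

hits, total = presence_score(responses, "AcmeBill")
print(f"Score: {hits}/{total}")
```

Whole-word matching avoids counting a longer name that merely contains your brand, but it will miss misspellings and abbreviations, so spot-check the results by hand.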

Layer 1 is about building content that ranks for these solution-aware queries:

Critical: If you’re not OK with listing or mentioning other companies, including competitors, you’ll struggle to drive meaningful visibility here. When AI answers a buying question, it pulls from these formats. If you’re not in those sources, you don’t exist to buyers using AI search.

This means comprehensive listicles and comparison content. We’ve exhaustively tested this. If you want to win BOFU search in the AI era, it’s a must.

Section 1: Introduction (3-4 sentences). Include primary keyword naturally. End with “In this article, we will cover…” to set expectations. Keep it short and punchy.

Section 2: Evaluation Criteria. Explain the criteria used for ranking (features, price, user-friendliness, support, integrations). Builds credibility and sets transparent framework. Critical for LLM parsing: consistent criteria = better AI comprehension.

Section 3: Comparison & Ratings Chart. Create a summary table with: Rank, Software Name, Best For, G2/Capterra Rating, Pricing. This table is highly LLM-parseable and often gets cited directly.

Section 4: Individual Software Reviews. Position #1 (Your Software): Full pain/problem content highlighting 3 core pains and features that solve them. Include mini case study, interactive demo, integrations, support, ratings, pricing. Positions #2+: Software intro, top features, pros/cons, integrations, pricing.

Section 5: Feature Selection Guide. “What features should you look for when choosing [category]?” Structure as: Pain/Problem → Desired Result → Feature. Reinforces your positioning while providing genuine value.

Before creating individual pages, establish the infrastructure: Create /compare/ subfolder, build master compare page, add footer link for site-wide internal linking, implement breadcrumbs.

Above the Fold: USP headline (Why [Your Brand] vs [Competitor]), 3 core differentiators as visual callouts, primary CTA.

Comparison Section: Side-by-side feature comparison table, pricing comparison, detailed breakdown of feature differences, “What to consider” section.

Trust Section: Customer testimonials (ideally from competitors’ former customers), G2/Capterra rating comparison, case study snippets.

CTA Section: Clear conversion action (demo, free trial, signup), FAQ section addressing common switching concerns.

Critical Mindset: It’s NOT about you. The objective is to allow users to make a true comparison and understand which is the best fit for their needs. Pages should come across as unbiased. Never trash the competition. Stay objective. If your competition takes cheap shots, take the high ground and disprove claims with facts. Focus on your advantages.

Monthly: High-converting BOFU pages: pricing updates, new feature additions, review score updates.

Quarterly: Full content audit: performance analysis, competitor changes, new entrants to add.

As Needed: Competitor launches, major product updates, pricing changes in market.

Freshness Signals: Include year in title tag and H1. Always display “Published on: [X]” and “Last Updated: [X]” when content is refreshed. Models rely on these when making relevance decisions.

Presence gets you into the right content. Velocity builds the signal that makes AI confident recommending you.

According to Ahrefs’ study of 75K brands, being mentioned on highly-linked pages has a strong correlation with visibility in AI Overviews. The more your brand appears across high-quality web pages, the more likely AI includes you in responses.

We see the same pattern. Brands with 40+ third-party mentions appeared in ChatGPT 3.7x more frequently than those without any mentions.

This isn’t traditional link building for Google rankings. And not all brand mentions are the same. You’re building mention frequency that trains AI to recognize your brand as a credible answer.

Citation-worthy assets. Original research, expert roundups, benchmark reports. Content that earns mentions naturally because others want to reference it.

Citation outreach. Getting placed in content that already ranks. If an article ranking #1 for “best CRM for small business” mentions your product, AI surfaces your brand when answering related prompts. You don’t always need to create new content. You can get into existing content that AI already cites.

Use a tool like Scrunch or Ahrefs Brand Radar to track your core solution-aware prompts across ChatGPT, Perplexity, and Gemini. For each tracked prompt, extract which domains are being cited, which specific pages are referenced, how frequently each domain appears, and whether your brand currently appears.

Prioritize AI Citation Targets: Filter for domains where your brand is NOT currently mentioned, the content is a listicle/comparison/roundup format (editable), and the domain appears across multiple prompts (high leverage).

Pull SERP data for your solution-aware keywords using Ahrefs or SEMrush. Focus on “Best [category] software” queries, “[Category] tools for [use case]” queries, and “[Competitor] alternatives” queries.

Your highest-priority targets are pages that appear in BOTH lists: currently ranking in Google top 10 AND currently being cited by AI. These pages have double leverage.
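The filtering and cross-referencing described above can be sketched in Python. Treat this as an illustration under assumptions: the `CitedPage` fields, the `min_prompts` threshold, and the example URLs are hypothetical and do not reflect the export format of Scrunch, Ahrefs, or SEMrush.

```python
from dataclasses import dataclass


@dataclass
class CitedPage:
    url: str
    content_format: str    # e.g. "listicle", "comparison", "roundup", "docs"
    prompts_cited_in: int  # how many tracked prompts cite this page's domain
    mentions_brand: bool   # does the page already mention your brand?


# Formats where an editor can realistically add a new entry (assumption).
EDITABLE_FORMATS = {"listicle", "comparison", "roundup"}


def prioritize(cited_pages, google_top10_urls, min_prompts=2):
    """Filter AI-cited pages to outreach targets, double-leverage pages first."""
    top10 = set(google_top10_urls)
    targets = [
        p for p in cited_pages
        if not p.mentions_brand
        and p.content_format in EDITABLE_FORMATS
        and p.prompts_cited_in >= min_prompts
    ]
    # Pages that also rank in Google's top 10 sort first, then by prompt count.
    return sorted(targets, key=lambda p: (p.url not in top10, -p.prompts_cited_in))


# Hypothetical data for illustration only.
pages = [
    CitedPage("https://reviews.example.com/best-crm", "listicle", 5, False),
    CitedPage("https://blog.example.org/crm-roundup", "roundup", 8, False),
    CitedPage("https://docs.example.net/crm-api", "docs", 6, False),
    CitedPage("https://news.example.com/top-crm", "listicle", 4, True),
    CitedPage("https://site.example.io/crm-vs", "comparison", 1, False),
]
top10 = ["https://reviews.example.com/best-crm", "https://news.example.com/top-crm"]

for page in prioritize(pages, top10):
    print(page.url)
```

In this sketch the page that is both AI-cited and in Google's top 10 is listed ahead of a page cited in more prompts, which mirrors the double-leverage rule.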

For each target domain, identify the content author, editor, marketer, or SEO contact. Lead with value: offer updated information, exclusive data, or a unique angle. Position as helping them keep content fresh and comprehensive. Offer to provide product screenshots, quotes, or comparison data.

Outreach gets you into existing content. Assets earn mentions organically.

Asset Types That Earn Citations: Original research (“State of [Industry] Report 2026”), benchmark reports (“[Category] Benchmark: 500 Companies Analyzed”), expert roundups (contributors share and link), industry surveys (first-party data is scarce).

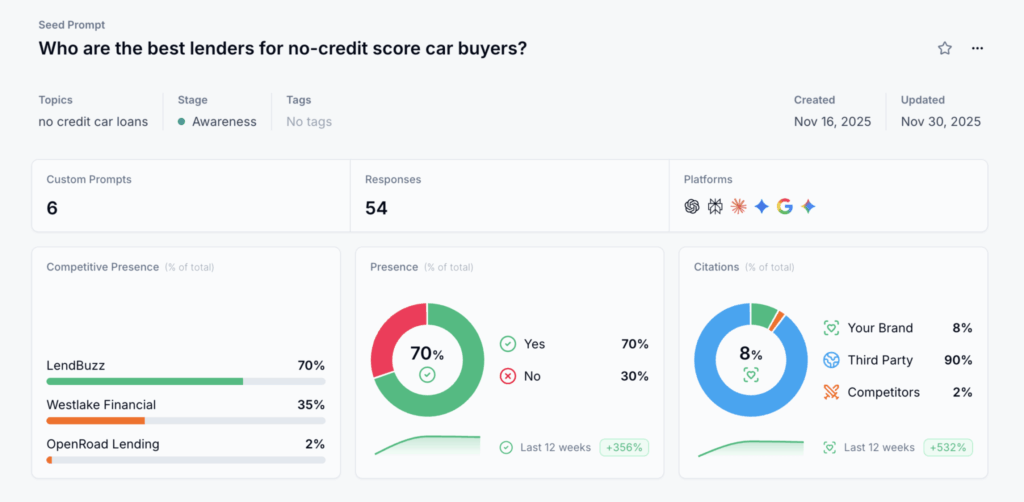

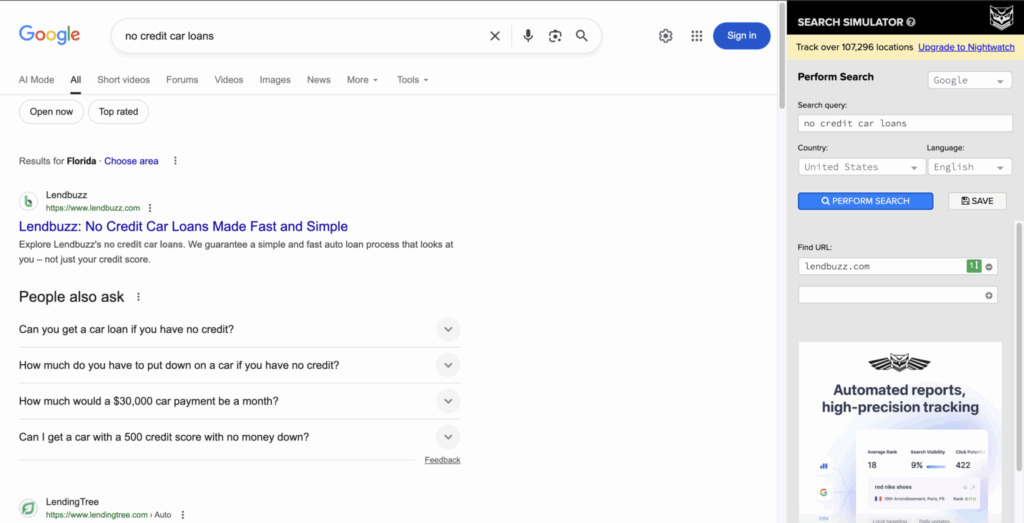

Lendbuzz is an AI-powered auto financing platform that helps dealerships approve more customers, increase loan volume, and expand access to credit for borrowers who lack traditional credit history. It has $2.1 billion in funding and 400 employees, and it is well known across the auto financing ecosystem.

Despite strong real-world traction, Lendbuzz was not being surfaced across LLMs for solution-aware prompts that directly match dealership buying behavior.

When we partnered with Lendbuzz, their AI search presence was nonexistent. Across seven tracked LLM sources (ChatGPT, Perplexity, Claude, Gemini, and others), Lendbuzz had zero visibility for solution-aware queries such as:

Competitors with weaker technology but stronger structured content were being prioritized instead. AI systems do not assume. They surface brands only when they can parse, trust, and cite the source material behind them.

Phase 1: Build LLM-Optimized Content. We created high-quality listicles targeting Lendbuzz’s core solution-aware queries. These listicles consistently outperform all other formats in LLM visibility because they are simple for models to interpret, the structure matches how models answer “best tool” questions, and they provide clear hierarchy and comparison frameworks.

Our listicles followed a consistent, LLM-friendly format: Problem-focused openings highlighting dealership challenges, clear #1 positioning for Lendbuzz with feature clarity and real dealer use cases, comparison frameworks with standardized fields (best-fit use cases, ratings, integrations), and highly structured short-form formatting.

Phase 2: Build Citations and Authority. We targeted high-authority websites that LLMs already cite, secured inclusion in existing listicles that already appear in LLM results, and directed links to the specific pages that fuel LLM visibility.

Within five days, Lendbuzz went from zero visibility to 37% visibility across seven tracked AI search sources.

The foundation is in place. The acceleration is underway.

Here’s the uncomfortable truth: AI search attribution is messy. Most AI-driven traffic shows up as direct traffic or branded search in your analytics. There’s no “ChatGPT” source in GA4.

That doesn’t mean you can’t measure it. You just need to track the right signals.

Mention rate. What percentage of relevant prompts return your brand? Run searches weekly or use tools like Scrunch or Ahrefs Brand Radar to track this systematically. This is your core visibility KPI.

Citation rate. When AI mentions you, how often does it cite your content as the source? Being cited (not just mentioned) means your content is influencing the AI’s response directly.

Share of voice. Your mentions compared to competitors across a set of target prompts. Track this monthly. If competitors are showing up 3x more often, that’s the gap you need to close.
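As a rough illustration, the three metrics can be computed from per-prompt records like this. The record layout (`brands_mentioned`, `cited_domains`), the brand names, and the domains are hypothetical. Share of voice is calculated here as your mentions divided by all brand mentions across the prompt set, which is one common definition rather than the only one.

```python
from collections import Counter


def visibility_metrics(results, brand, brand_domain):
    """Compute mention rate, citation rate, and share of voice for one brand."""
    total = len(results)
    mentioned = [r for r in results if brand in r["brands_mentioned"]]
    cited = [r for r in mentioned if brand_domain in r["cited_domains"]]

    # Share of prompts where the brand appears at all.
    mention_rate = len(mentioned) / total if total else 0.0
    # Of the responses that mention the brand, how many cite its own content.
    citation_rate = len(cited) / len(mentioned) if mentioned else 0.0

    # Brand mentions as a fraction of every brand mention in the prompt set.
    counts = Counter(b for r in results for b in r["brands_mentioned"])
    all_mentions = sum(counts.values())
    share_of_voice = counts[brand] / all_mentions if all_mentions else 0.0

    return {
        "mention_rate": mention_rate,
        "citation_rate": citation_rate,
        "share_of_voice": share_of_voice,
    }


# Hypothetical tracker export: one record per prompt (assumed data).
results = [
    {"brands_mentioned": ["AcmeBill", "InvoiceCo"],
     "cited_domains": ["acmebill.example", "reviews.example.com"]},
    {"brands_mentioned": ["InvoiceCo", "PayFlow"],
     "cited_domains": ["reviews.example.com"]},
    {"brands_mentioned": ["AcmeBill"],
     "cited_domains": ["reviews.example.com"]},
    {"brands_mentioned": ["InvoiceCo"], "cited_domains": []},
]

metrics = visibility_metrics(results, "AcmeBill", "acmebill.example")
for name, value in metrics.items():
    print(f"{name}: {value:.0%}")
```

Running the same function for each competitor gives you the side-by-side numbers needed to see where the gap is.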

Since you can’t track the click directly, look for correlated movement:

Branded search volume. AI mentions drive brand searches. We typically see 20-40% increases in branded search volume within 60-90 days of improved AI visibility. Track this in Search Console.

Self-reported attribution. Add “How did you hear about us?” to your demo forms. Include “AI Search (ChatGPT, Gemini, etc.)” as an option. This is the most direct signal you’ll get. Not perfect due to recency bias, but still much better than nothing.

AI-sourced leads behave differently. Watch for:

Don’t chase perfect attribution. You won’t get it.

Focus on correlation: as your AI visibility improves (measured by mention rate and share of voice), do you see corresponding lifts in branded search, direct traffic quality, and lead quality? That’s your signal.
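One simple way to check for that correlated movement is a Pearson correlation between your weekly mention rate and a proxy signal such as branded search clicks. The sketch below is illustrative only: both series are made-up numbers, and a high coefficient indicates the two moved together, not that one caused the other.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length with at least 2 points")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / (spread_x * spread_y)


# Hypothetical weekly data (assumed values, for illustration only).
mention_rate = [0.10, 0.15, 0.20, 0.30, 0.35, 0.40]
branded_clicks = [120, 125, 140, 160, 170, 190]

r = pearson(mention_rate, branded_clicks)
print(f"Correlation between mention rate and branded clicks: {r:.2f}")
```

With only a handful of weekly data points the coefficient is noisy, so read it alongside lead quality and self-reported attribution rather than on its own.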

The companies winning at AI search right now aren’t the ones with the best dashboards. They’re the ones executing while everyone else is still trying to figure out how to even start.

There’s a lot of noise in the market right now about AI search optimization.

You’ve probably seen people talking about:

We’ve experimented with all of these. Extensively.

Here’s what we’ve found: the two layers above (BOFU Presence Building and Citation Velocity) are the 80/20 of driving results in terms of visibility that converts to pipeline.

The other tactics? We have NOT seen them be actual movers or drivers of AI search visibility in our own experimentation.

llms.txt files. These are essentially permission signals, not ranking factors. Having one doesn’t make AI more likely to recommend you. It just tells crawlers they can access your content. Most AI systems pull from indexed web content regardless.

Schema markup. Minimal impact on how ChatGPT or Perplexity decide which brands to recommend.

Reddit posting. This can work for brand awareness and for owning traditional Google SERP positions where Reddit appears for your solution-aware keywords. But the ROI rarely justifies the effort required to do this at scale compared to targeted citation building.

AI-specific meta tags. There’s no standardized “AI meta tag” that models reliably use. This is mostly speculation and cargo-cult SEO at this point.

The fundamentals win. Create content that directly answers buying questions (Layer 1). Build third-party mentions on sources AI already trusts (Layer 2). Everything else is noise until these two layers are solid.

Feel free to experiment with these other tactics as you see fit. We’re not saying they’re useless forever. But if you’re trying to drive pipeline from AI search today, focus on the GEO Stack first. It’s what actually works.

Every agency is now claiming “AI SEO.”

Here’s what they get wrong:

They focus on rankings, not recommendations. Google rankings and AI recommendations are different games. You can rank #1 on Google and be invisible in ChatGPT. Traditional SEO agencies optimize for the wrong outcome.

They build links, not citations. Link building for PageRank and citation building for AI trust are fundamentally different. A link from a low-quality guest post does nothing for AI visibility. A mention in a high-authority listicle that AI already cites changes everything.

They create TOFU content, not BOFU. Most agencies default to top-of-funnel “what is” content because it’s easier to produce volume. But AI search rewards bottom-of-funnel content that answers buying questions. The economics are completely different.

They measure traffic, not pipeline. Traditional SEO success = more organic traffic. AI search success = more demos from high-intent buyers. If your agency can’t connect its work to pipeline, it’s optimizing for vanity metrics.

We’ve been running AI search campaigns for B2B SaaS companies for over 12 months. The playbook in this guide is the same one we execute with every client.

Here’s what working with us looks like:

AI Search Audit. We run your brand through 50+ buying prompts across ChatGPT, Perplexity, Claude, and Gemini. You’ll see exactly where you show up, where competitors show up instead, and which gaps are costing you pipeline.

BOFU Content Engine. We build the comparison pages, alternatives content, and “best software” listicles that AI pulls from when answering buying questions. This is the foundation of Layer 1.

Citation Velocity Program. We get your brand placed in the content AI already cites. This isn’t spray-and-pray brand mention building. It’s targeted placement in high-authority sources that influence AI responses.

Ongoing Visibility Tracking. Monthly reporting on mention rate, citation rate, and share of voice across AI platforms. You’ll know exactly how you’re trending against competitors. We map AI visibility performance to demos/trials/pipeline.

We work exclusively with B2B SaaS companies. That focus means we understand your buyer journey, your competitive landscape, and what actually drives demos and pipeline in your market.

This playbook works whether you’re in HealthTech, FinTech, or any vertical SaaS. The mechanics are the same. The execution is what separates winners from everyone else.

Book a 30-minute AI search audit call. We’ll run your brand through our prompt testing framework live and show you exactly where you stand today.

No pitch deck. No generic SEO talk. Just data on your AI visibility and a clear path to improving it.

Founder of Rock The Rankings, an SEO partner that helps B2B SaaS brands crush their organic growth goals. An avid fan of tennis, and growing micro-SaaS businesses on the weekend. 2x SaaS Co-Founder – Currently working to build and scale Simple Testimonial.
